Color Segmentation

It can be difficult to determine the color of an object in a complex scene. Colors of nearby objects don't just reflect off each other; neighboring colors also change how we perceive a color. Here we break an image down into clusters of colors, and provide an interactive example for playing with the idea.

Name that Shirt Color!

My interest here started with a Facebook post debating the color of a shirt. Most thought the shirt was gray, some thought green, some brown. I had been wanting to play with scikit-image, so this seemed like a perfect starter project.

Color Spaces

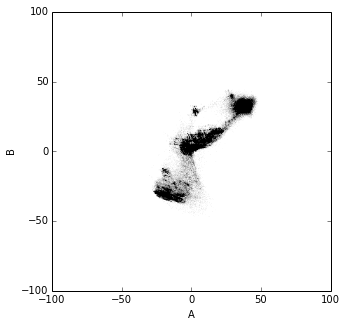

Images that we see on a computer monitor are represented with red, green, and blue pixels. There are many other ways to describe a color, though. Folks who work with print are familiar with CMYK, a four-dimensional description. Since we want to cluster the image based on color, without being too affected by brightness, we can use a color space like LAB. In LAB, there are two dimensions for the "color" and one for brightness (sorta like HSV). Thus if we look at just the A and B components (from L-A-B), we can make clusters that are not too sensitive to white-light illumination.
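To see why dropping L helps, here is a small sketch using scikit-image's `rgb2lab` on three gray pixels of different brightness. This is just an illustration, not code from the notebook: the L channel changes a lot, while A and B stay at (essentially) zero, because gray has no "color" in LAB terms.

```python
import numpy as np
from skimage.color import rgb2lab

# A 1x3 RGB float image (values in [0, 1]): dark, medium, and light gray
grays = np.array([[[0.2, 0.2, 0.2],
                   [0.5, 0.5, 0.5],
                   [0.9, 0.9, 0.9]]])

lab = rgb2lab(grays)
L, a, b = lab[..., 0], lab[..., 1], lab[..., 2]

print("L:", np.round(L, 1))  # brightness rises with the gray level
print("a:", np.round(a, 2))  # chroma stays near zero for every gray
print("b:", np.round(b, 2))
```

Small nonzero A/B values can show up from floating-point rounding and the white-point constants, but they are tiny compared to the spread of real colors.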

Color Clusters

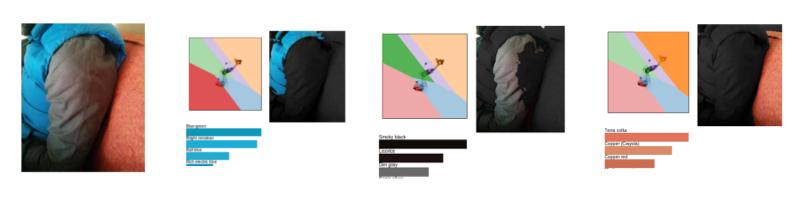

We take all the pixels in the shirt image in LAB space and take out the "L" component (which represents brightness). Here's a histogram of the A and B values:
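A minimal sketch of building such a histogram, assuming the image is an RGB array that `rgb2lab` accepts; the helper name `ab_histogram`, the bin count, and the `[-128, 128]` range are illustrative choices, not taken from the notebook. Plotting (for example with matplotlib's `imshow` on a log scale) is left out to keep it self-contained.

```python
import numpy as np
from skimage.color import rgb2lab

def ab_histogram(rgb_image, bins=64):
    """2-D histogram of the A and B channels of an RGB image, ignoring L."""
    lab = rgb2lab(rgb_image)
    a = lab[..., 1].ravel()
    b = lab[..., 2].ravel()
    # For sRGB input, A and B fall well inside [-128, 128]
    hist, a_edges, b_edges = np.histogram2d(
        a, b, bins=bins, range=[[-128, 128], [-128, 128]]
    )
    return hist, a_edges, b_edges

# Illustrative usage on a random image (stand-in for the shirt photo)
rng = np.random.default_rng(0)
demo = rng.random((32, 32, 3))
hist, a_edges, b_edges = ab_histogram(demo)
print(hist.shape, hist.sum())  # (64, 64) 1024.0
```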

There are obvious clusters in there, so we apply k-means to identify different clumps of pixels.
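Here is one way to sketch that clustering step. It uses scikit-learn's `KMeans` on the (A, B) pairs of every pixel; the post doesn't say which k-means implementation or how many clusters were used, so `n_clusters` and the helper `ab_clusters` are assumptions for illustration.

```python
import numpy as np
from skimage.color import rgb2lab
from sklearn.cluster import KMeans

def ab_clusters(rgb_image, n_clusters=8, seed=0):
    """Cluster pixels by their (A, B) values; returns a label image and centers."""
    lab = rgb2lab(rgb_image)
    ab = lab[..., 1:].reshape(-1, 2)  # one (A, B) row per pixel, L dropped
    km = KMeans(n_clusters=n_clusters, n_init=10, random_state=seed).fit(ab)
    labels = km.labels_.reshape(rgb_image.shape[:2])
    return labels, km.cluster_centers_

# Synthetic test image: left half reddish, right half greenish,
# with brightness fading from top to bottom
img = np.zeros((20, 20, 3))
img[:, :10] = [0.8, 0.2, 0.2]
img[:, 10:] = [0.2, 0.7, 0.2]
img *= np.linspace(0.4, 1.0, 20)[:, None, None]

labels, centers = ab_clusters(img, n_clusters=2)
```

Even though brightness varies down the image, the two halves land in separate clusters, which is the point of leaving L out.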

Visualization

Hover over different clusters in the histogram to see them highlighted in the image.
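The highlighting itself can be approximated with a mask: keep the pixels of the chosen cluster in color and show everything else in grayscale. This is a sketch of the idea, not the code behind the interactive page; `highlight_cluster` is a hypothetical helper and `labels` is assumed to be a per-pixel cluster label image like the one k-means produces.

```python
import numpy as np
from skimage.color import rgb2gray
from skimage.util import img_as_float

def highlight_cluster(rgb_image, labels, cluster_id):
    """Show one cluster in color and gray out every other pixel."""
    rgb = img_as_float(rgb_image)         # work in [0, 1] floats so ranges match
    gray = rgb2gray(rgb)                  # (H, W) luminance
    out = np.dstack([gray, gray, gray])   # grayscale image with 3 channels
    mask = labels == cluster_id           # (H, W) boolean mask
    out[mask] = rgb[mask]                 # restore original color in the cluster
    return out

# Illustrative usage: top two rows belong to cluster 1
rng = np.random.default_rng(1)
demo = rng.random((4, 4, 3))
demo_labels = np.zeros((4, 4), dtype=int)
demo_labels[:2] = 1
out = highlight_cluster(demo, demo_labels, 1)
```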

Okay, the Shirt is Gray...

Maybe "gray" is not a surprising answer, but the techniques here are useful in many circumstances. The perceived color of an object is determined in large part by lighting: hovering over the green segment highlights the gray part of the shirt that is probably receiving sunlight. You probably learned some color names too (caput mortuum, anyone?). Also note how we can pick out the reflection of the couch by hovering over that lavender component. In the grayed-out version, note how much redder the couch reflection looks. This is an instance of color perception being very dependent on surrounding colors.

Notebook and Technique

As with most of my posts, there is an accompanying IPython notebook. You can play around with it and use any image you want, in case further shirt disputes ever arise.

The list of color names was found by scraping the Wikipedia color page. Once I had the named colors, I put them into a KDTree and then used it to query for the closest named color for each point in a cluster. The list of Wikipedia colors can reproduce the image fairly well, as you can see in the notebook. Going forward, I am also putting all my post-associated code into this GitHub repo, so check out that repo and join the fun!
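A rough sketch of the named-color lookup with `scipy.spatial.KDTree`. A few assumptions here: the six-entry `NAMED_COLORS` dict is a hypothetical stand-in for the scraped Wikipedia list, and distances are measured in LAB space (the post doesn't say which space the tree was built in).

```python
import numpy as np
from scipy.spatial import KDTree
from skimage.color import rgb2lab

# Hypothetical stand-in for the scraped Wikipedia list: name -> sRGB (0-255)
NAMED_COLORS = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "gray": (128, 128, 128),
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "blue": (0, 0, 255),
}

def build_color_index(named_colors):
    """Return the color names and a KDTree over their LAB coordinates."""
    names = list(named_colors)
    rgb = np.array([named_colors[n] for n in names], dtype=float) / 255.0
    lab = rgb2lab(rgb[np.newaxis, :, :])[0]  # treat the list as a 1xN image
    return names, KDTree(lab)

def nearest_names(pixels_rgb, names, tree):
    """pixels_rgb: (N, 3) floats in [0, 1]. Returns the closest name per pixel."""
    lab = rgb2lab(pixels_rgb[np.newaxis, :, :])[0]
    _, idx = tree.query(lab)
    return [names[i] for i in idx]

names, tree = build_color_index(NAMED_COLORS)
result = nearest_names(np.array([[0.47, 0.49, 0.51], [0.9, 0.1, 0.1]]), names, tree)
print(result)  # ['gray', 'red']
```

A KDTree makes each nearest-neighbor query fast, which matters once the palette grows to the hundreds of names on the Wikipedia page and you query every pixel in a cluster.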

When you are playing around in skimage, pay attention to which dtypes are being used for images. I often found that some transformation had changed an image from ints to floats to uints, which yielded some odd bugs. If you have troubles, that is a good first place to look.
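A few of the dtype surprises are easy to reproduce. This small example uses NumPy and scikit-image's `img_as_float` / `img_as_ubyte` converters; the specific pixel values are arbitrary.

```python
import numpy as np
from skimage.util import img_as_float, img_as_ubyte

img_u8 = np.array([[0, 128, 255]], dtype=np.uint8)

# uint8 arithmetic wraps around silently
wrapped = img_u8 + np.uint8(10)
print(wrapped)  # [[ 10 138   9]]  (255 + 10 wraps to 9)

# img_as_float rescales uint8 [0, 255] to float [0.0, 1.0]
as_float = img_as_float(img_u8)
print(as_float.dtype, as_float.max())  # float64 1.0

# A plain astype keeps the 0-255 range, which functions expecting [0, 1] misread
naive = img_u8.astype(float)
print(naive.max())  # 255.0

# img_as_ubyte converts [0, 1] floats back to uint8
back = img_as_ubyte(as_float)
print(back)  # [[  0 128 255]]
```

Using the `img_as_*` converters instead of `astype` keeps the value range consistent with what skimage functions expect for each dtype.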

Thanks!

A huge thanks to Stéfan van der Walt. I took the clustering idea and implementation from code that he presented at PyData 2012. His original code for this is here. He shows more cool skimage tricks in the video of that talk, so do check it out.